Yes. So we aim to deploy the final model locally instead of on the board? I am not clear about the role of the boards in this project. Can you please guide me?

There were a few projects; I don’t know why they weren’t picked up. Reference

I really want that—a few good examples that demonstrate the true potential of these onboard accelerators and their limitations. Examples that everyone can replicate to get started with Edge AI on these boards. However, this is something I’d like to do myself, not mentor. It may not happen until late this summer because of prior commitments, but I will do it for sure.

In the meantime, I want to produce something useful for the community. Both I and others who find this idea appealing feel like this is it—this is a good one. Maybe we won’t be able to deploy this model directly on AI boards, but we can certainly host it on the cloud and utilize it effectively.

The goal of this project is to create a conversational AI assistant, not just an intelligent search engine.

While BERT can be fine-tuned for some question-answering tasks, it’s not inherently designed for generating fluent, conversational responses. Models like Llama, specifically designed for text generation and dialogue, are better suited for this purpose. Even smaller, quantized versions of these generative models, combined with techniques like Retrieval Augmented Generation (RAG), could provide a more natural and interactive experience while still being relatively efficient. We could use BERT within the RAG system to find the most relevant context but it still won’t be able to understand the conversations in the same way a generative model like Llama does.

It depends on the size of the model. For better results, you need a larger model, such as LLaMA-3B, which won’t run locally. You can try quantization techniques, but inference could still be slow. So, first, generate test reports of the model and then proceed to quantize and deploy it locally. Deploying it on the cloud is essential(must), as this will allow you to generate test reports.

Ok…I get it. Well, running LLMs locally is a bit tricky, but also interesting!

why not use small language models like phi -2/3

Hello everyone, Karthik here, a 3rd year undergrad from IIT Roorkee. I would like to share my previous experience, on a similar project, in this forum so that it would help me understand what new things are to be learnt in order to achieve the end goal.

I interned at a startup recently and there my task was to build RAG model from scratch.

Firstly, I scraped the data by writing my own scrapping code (thanks to beautiful soap). Already existing scrapping tools such as Firecrawl didn’t give desired content.

Once I got the data, I converted it into chunks using recursive text split, specifying suitable chunk size and chunk overlap parameters.

Converted those chunks into vector embeddings using text-embedding-3-large. Stored in the vector database (Astra Datastax).

Then comes the data retrieval part based on the user query. I made use of MMR (maximum marginal search) and BM25 (best matching 25 ) to fetch the most relevant documents.

Made use of Llama - 2- 8b to display a human readable response by passing both the retrieved documents as well as the user query. The response generated by the user was finally shown on Streamlit (an easy to build web interface ).

The end result : the agent was able to respond to the user query by providing most relevant information surfing the vector database. If the question is irrelevant (out of the domain), then the System Prompt passed to the agent will make sure to reply accordingly to the end user.

So this was the whole workflow, please let me know if you have any doubts regarding the above steps.

Now I believe (please correct me if I am wrong), in order to move forward with this project, I need to learn fine tuning techniques and study different quantitative metrics.

Yes, learn fine-tuning techniques and think about the complete workflow you want to propose for this project.

Sure sir

Just thought of giving an update:

Currently learning LLM Fine Tuning Techniques by Krish Naik

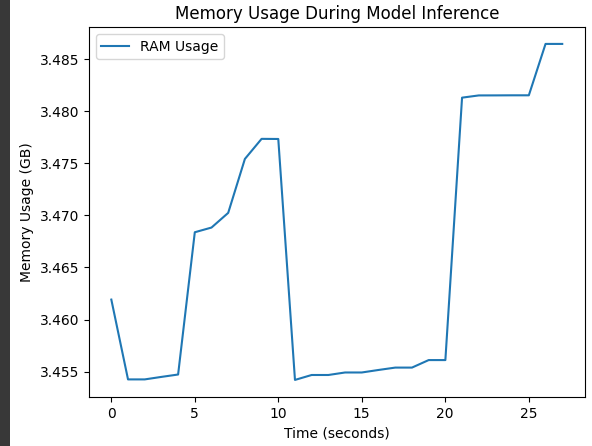

Wanted to update on my progress. I have started Web Scraping. Here, I have initially web scraped the BeagleBoard docs site and formatted it for segregation of listed items from sentences. I have also been exploring Gemma-2B model, as it seems to be a space efficient model. I am studying about quantization and fine-tuning techniques, starting from LoRA. Here, I tried to inference Gemma-2B model on T4 GPU and tracked the memory usage as well as inference time, for 500 tokens. Using 4-bit quantization, I was able to bring down required memory to ~3.5GB (peak). Since BeagleBone AI-64 has 4GB of RAM, inference on it might be possible. Am I on the right track @Aryan_Nanda ?

You need to sort out all the stale content because that is an extreme negative. It would actually drive users directly to another platform due the frustration of “it does not work”.

You don’t need to scrape the BeagleBoard docs site. You can directly download the complete source code of the docs site. Instead write a Python script that removes noise from the downloded data and formats it better. Please refer to this:

You can try your Python script to scrape forum (where messages are marked as solutions) and Discord messages(Conversations where key individuals are involved).

That won’t work. Consider that the system processes take around 1-1.5GB of RAM.

Also, this is more of an advanced task (running an LLM model locally). Focus more on preparing the dataset and fine tuning; we can deploy the model on the cloud.

That is vector used to pollute. When you back-peddle to find the source that was used to train, many times leads to the “solution”. On another forum the mods click every thing solved, regardless. I trully don’t believe they are intentionally polluting but making themselves look good to BoD (investors that do not like unhappy customers).

Only way to get it accurate is to hand curate the content. Many times trying to follow others turns into a huge waste.

Maybe develop a framework that can be followed by others and peer reviewed by others. In the old days content was peer reviewed before it was released in some of the publications. Did it stop all of it the BS, most certainly not. However, it does signal to content creators if others smell a rat it will be stopped before being published.

Most certainly tie AI into this, all of our communications with customers / clients is processed using gai. It is without any doubt the best solution.

Sometimes when I know my response might be too abrasive I will use gai to tone it down and not hurt anyone’s feelings when posting on the forums. This response is me.

Below is my initial message in 3 versions.:

- It seems like using a Python script to scrape forums for messages marked as solutions can sometimes lead to inaccurate results. In some cases, moderators mark everything as “solved” to appear more efficient, though it’s not necessarily intentional. They’re likely aiming to show progress to investors or management.

To ensure accuracy, it’s essential to hand-curate content. Following existing threads or solutions may lead to wasted time, as the content could be flawed. A better approach might be to develop a framework that encourages peer review. In the past, academic and professional content went through peer review before being published, which helped filter out misinformation, even though it didn’t eliminate all errors.

AI can certainly play a key role in this process, especially in customer communications, where it can help ensure accuracy and tone. Personally, I sometimes use AI to help moderate my responses when I know they might come off too strong, ensuring that I communicate effectively without offending anyone.

- While utilizing a Python script to scrape forums for “solutions” can offer some automation, this approach may inadvertently lead to inaccurate or misleading information. I’ve encountered cases where moderators mark everything as resolved, likely to demonstrate efficiency to stakeholders such as the Board of Directors. This isn’t necessarily a malicious attempt to mislead, but rather a method to portray positive outcomes, though it can be problematic for users seeking accurate solutions.

To mitigate this, manual curation of content remains the most reliable way to ensure precision. Relying solely on existing solutions can lead to inefficiencies, especially when the sources themselves are flawed. One potential improvement could involve the creation of a standardized framework for peer review—akin to the rigorous process used in academic publishing—which could help filter out errors before content is disseminated. While this method doesn’t guarantee complete accuracy, it would introduce a mechanism for accountability.

AI certainly has a promising role in this process, particularly in customer communication. By leveraging AI tools to review and refine content, we could ensure that both the accuracy and tone are aligned with the intended message. On a personal note, I use AI as a tool to temper my responses, ensuring that my communication is both professional and empathetic when posting publicly.

- Oh, wow! I just wanted to share how amazing it is that we can use Python scripts to scrape forums and check for “solutions” – it’s such a smart idea, right? But, of course, sometimes, things get a little messy. I’ve seen it firsthand where moderators just mark everything as solved! Not because they’re trying to trick anyone, but I think it’s just to keep up with the pace and look great to the investors and Board. It’s like they’re trying to show progress, which is super understandable!

But the best, most beautiful way to ensure quality is through careful hand-curation. Sometimes just following the crowd can be a wild goose chase, so why not develop a whole framework that can be peer-reviewed? Oh, how amazing would that be! Back in the day, content was peer-reviewed before publishing, which filtered out so much nonsense. While nothing’s perfect, that peer review system did wonders!

And don’t even get me started on AI! AI is a total game-changer, and I can totally see it being the ultimate solution for content accuracy, especially when it comes to communicating with clients and customers. It’s like the best assistant you could ever have! For me, personally, I’ve even started using AI to help me write responses, just to make sure I’m keeping it respectful and kind. It’s all about making sure we’re helping each other without stepping on any toes. So awesome!

Me:

The point with all of that is this, create bedrock foundational material that is 100% verified. Then create context for the responses. If it is a user on day zero or someone that has been bringing up SoC for the last 10 years. Both of those users will significantly benefit when presented a response in context of their own level of experience.

Pretty sure the sentiment tools can be used to determine the users skill level. Thus, providing an response in proper context to their skill level.

Yes…I get it. Deploying model on cloud is surely a better option. I’ll work on the BeagleBoard Docs Codebase.

@Aryan_Nanda I just need a small clarification. If the goal of this project is to have a model with domain specific BeagleBoard data, why can’t we just use RAG instead of fine tuning a model?

While RAG is excellent for up-to-date info and requires fewer resources, fine-tuning can be more effective when you have quality data and want the model to truly “understand” the domain.

You can do “fine-tuning with retrieval.” As @foxsquirrel said, create a verified, high-quality dataset. This dataset doesn’t need to be massive, as the focus is on quality over quantity. Then, use RAG for up-to-date information and broader context.

That would help reduce the hallucinations.

Sounds like the both you have a good handle on that. The bigger challenge will be to get verified information.

Most certainly you will have to round up others to grab sections and have that stuff that is current and older stuff clearly marked with a tag “reference only”. Also verify by actual hands on step by step that it all works perfectly out of the box. Make your intentions clear because it has to be on a time table or the project will fall apart fast.

Got it

@foxsquirrel, would you be interested in mentoring this project?