Deep-learning based commercial detection and real-time replacement

Detailed summary below.

Goal: Build a deep-learning model, a training dataset with training scripts, and a runtime that detects commercials in the video stream and modifies it.

Hardware Skills: Ability to capture and display video streams

Software Skills: Python, TensorFlow, GStreamer, OpenCV

Possible Mentors: @jkridner, @lorforlinux

Expected size of project: 350 hours

Rating: medium

Upstream Repository: TBD

References:

- TBD

Project Overview

This idea proposal was aided by ChatGPT-4.

This project aims to develop a system that uses neural networks to detect and replace commercials in video streams on BeagleBoard hardware. Using the processing units available on BeagleBoard, the project will focus on a real-time, efficient solution that improves the viewing experience by switching to custom audio streams during commercial breaks.

Objectives

- Develop a neural network model: Combine Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) to analyze video and audio data, accurately identifying commercial segments within video streams.

- Implement a GStreamer plugin: Create a custom GStreamer plugin for BeagleBoard that uses the trained model to detect commercials in real time, replaces them with alternative content or obfuscates them, and replaces the audio with predefined streams.

- Optimize for BeagleBoard: Ensure the entire system is optimized for real-time performance on BeagleBoard hardware, taking into account its unique computational capabilities and constraints.
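
The detect-then-replace objective implies some decision logic between the model and the plugin output. The following is a minimal sketch of one possible approach, assuming the model emits one score in [0, 1] per analyzed frame. The `CommercialGate` class, its thresholds, and the source labels are hypothetical names chosen for illustration; they are not part of GStreamer or of any existing BeagleBoard code. It uses two thresholds plus a frame count so that a single noisy score does not toggle the replacement.

```python
from dataclasses import dataclass


@dataclass
class CommercialGate:
    """Debounce per-frame scores into a stable in-commercial flag (hypothetical helper)."""

    enter_threshold: float = 0.8  # assumed score that counts toward entering a commercial
    exit_threshold: float = 0.3   # assumed score that counts toward returning to the program
    min_frames: int = 3           # consecutive qualifying frames needed to switch state
    in_commercial: bool = False
    _streak: int = 0

    def update(self, score: float) -> bool:
        """Feed one model score and return the current state."""
        if self.in_commercial:
            wants_switch = score < self.exit_threshold
        else:
            wants_switch = score > self.enter_threshold
        # Count consecutive frames that argue for a state change.
        self._streak = self._streak + 1 if wants_switch else 0
        if self._streak >= self.min_frames:
            self.in_commercial = not self.in_commercial
            self._streak = 0
        return self.in_commercial


def choose_outputs(score_stream, gate):
    """Yield a (video_source, audio_source) label pair for each frame."""
    for score in score_stream:
        if gate.update(score):
            yield ("replacement", "predefined_audio")
        else:
            yield ("passthrough", "original_audio")


if __name__ == "__main__":
    scores = [0.1, 0.9, 0.9, 0.9, 0.9, 0.2, 0.9, 0.2, 0.2, 0.2]
    for score, sources in zip(scores, choose_outputs(scores, CommercialGate())):
        print(score, sources)
```

In a real plugin the two labels would map to whatever mechanism the pipeline uses to switch video and audio sources. The thresholds and `min_frames` trade detection delay against false switches and would need tuning on real data.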

Technical Approach

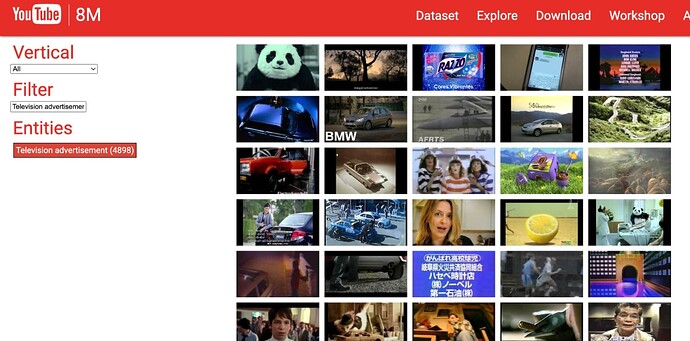

- Data Collection and Preparation: Start from publicly available datasets and manually mark the commercial segments to create a labeled dataset for training the model.

- Model Training and Evaluation: Utilize TensorFlow to train and evaluate the CNN-RNN model, focusing on achieving high accuracy while maintaining efficiency for real-time processing.

- Integration with BeagleBoard: Develop and integrate the GStreamer plugin, ensuring seamless operation within the BeagleBoard ecosystem for live and recorded video streams.
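
As an illustration of the data preparation and evaluation steps above, the sketch below turns manually marked commercial segments into per-frame labels and scores a set of predictions against them. It assumes annotations are stored as (start, end) pairs in seconds with an exclusive end. That format, and the function names `frame_labels` and `precision_recall`, are assumptions made for this example rather than an agreed project convention.

```python
def frame_labels(segments, duration_s, fps):
    """Return a 0/1 label per frame; 1 means the frame is inside a marked commercial.

    segments: list of (start_s, end_s) pairs from manual annotation, end exclusive.
    """
    n_frames = int(round(duration_s * fps))
    labels = [0] * n_frames
    for start_s, end_s in segments:
        # Clamp each segment to the clip boundaries before marking frames.
        first = max(0, int(round(start_s * fps)))
        last = min(n_frames, int(round(end_s * fps)))
        for i in range(first, last):
            labels[i] = 1
    return labels


def precision_recall(truth, predicted):
    """Frame-level precision and recall for the commercial class."""
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    truth = frame_labels([(1.0, 2.0)], duration_s=4.0, fps=2)
    predicted = [0, 0, 1, 1, 1, 0, 0, 0]  # made-up predictions for illustration
    print(truth, precision_recall(truth, predicted))
```

Frame-level precision and recall are only one possible metric. For a replacement system, low precision means program content gets replaced by mistake, which is likely the more visible failure.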

Expected Outcomes

- A fully functional prototype capable of detecting and replacing commercials in video streams in real time on BeagleBoard.

- Comprehensive documentation and a guide on deploying and using the system, contributing to the BeagleBoard community’s knowledge base.

- Performance benchmarks and optimization strategies specific to running AI models on BeagleBoard hardware.

Potential Challenges

- Balancing model accuracy with the computational limitations of embedded systems like BeagleBoard.

- Ensuring real-time performance without significant latency in live video streams.
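
The real-time constraint can be made concrete with a little arithmetic: at a given frame rate each frame has a fixed time budget, and a model that takes longer than that budget can only be run on every Nth frame. The sketch below works through that calculation. It assumes inference runs alongside the pipeline (for example in a separate thread) so its cost can be spread over several frame periods; `frame_budget_ms` and `inference_stride` are hypothetical helper names, and the timings in the usage example are made up rather than measured on any board.

```python
import math


def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at the given frame rate."""
    return 1000.0 / fps


def inference_stride(inference_ms, fps, overhead_ms=0.0):
    """Smallest N such that running the model on every Nth frame keeps up with the stream.

    overhead_ms is the assumed per-frame cost of everything except inference
    (capture, conversion, display).
    """
    budget = frame_budget_ms(fps) - overhead_ms
    if budget <= 0:
        raise ValueError("per-frame overhead already exceeds the frame budget")
    return max(1, math.ceil(inference_ms / budget))


if __name__ == "__main__":
    # Illustrative numbers only, not measurements.
    print(frame_budget_ms(25))
    print(inference_stride(inference_ms=100, fps=25))
    print(inference_stride(inference_ms=100, fps=25, overhead_ms=15))
```

A larger stride lowers the compute load but delays detection of the start and end of a commercial, so it interacts directly with the accuracy trade-off listed above.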

Community and Educational Benefits

This project will not only enhance the media consumption experience for users of BeagleBoard hardware but also serve as an educational resource on integrating AI and machine learning capabilities into embedded systems. It will provide valuable insights into:

- The practical challenges of deploying neural network models in resource-constrained environments.

- The development of custom GStreamer plugins for multimedia processing.

- Real-world applications of machine learning in enhancing digital media experiences.

Mentorship and Collaboration

This project seeks mentorship from experts in the BeagleBoard community, especially those with experience in machine learning, multimedia processing, and embedded system optimization.

When submitting this as a GSoC project proposal, ensure you clearly define your milestones, deliverables, and a timeline. Additionally, demonstrating any prior experience with machine learning, embedded systems, or multimedia processing can strengthen your proposal. Engaging with the BeagleBoard.org community through forums or mailing lists to discuss your idea and gather feedback before submission can also be beneficial.