Also, I have set up a Blog Website to document my progress, share my research, discuss the challenges I encounter and how I overcome them, and provide ‘how to’ guides. I’ll be posting biweekly blogs.


Since the GSoC coding period started on May 27th, I am considering this a Pre-GSoC update. I’ve set up my blog website, completed the intro video presentation, and had it reviewed by my mentors. Additionally, I created code repositories on GitHub and GitLab. Due to my ongoing exams, I haven’t made much progress on the coding part. I will catch up on this after my exams conclude next week.

For the upcoming week, I plan to make and upload the intro video, start with commercial data acquisition, and set up my BeagleBone AI-64. Although I am unsure how much time I can dedicate in the upcoming week due to my exams, I will strive to accomplish as much as possible.

Week 2 Updates:

- Dataset collection done

- Started with dataset preprocessing. (Merged Audio-Visual features)

- Week 0-1 Blog out - Link

Blockers:

While I was downloading the dataset in chunks and continuously updating the same file, the Jupyter notebook kernel restarted while processing the second-to-last chunk, which corrupted the file. As a result, I had to re-download the entire commercial features.
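One way to avoid this kind of corruption is to stop appending to a single file and instead write each chunk to its own file through a temporary file and an atomic rename. A kernel restart then costs at most the chunk in flight. This is only a minimal sketch: the function names, the `chunk_NNNN.bin` naming scheme, and the idea that chunks arrive as bytes are all assumptions for illustration, not the code actually used for the download.

```python
import os
import tempfile
from pathlib import Path
from typing import List


def save_chunk_atomically(data: bytes, out_dir: Path, index: int) -> Path:
    """Write one chunk to its own file via a temp file and an atomic rename."""
    out_dir.mkdir(parents=True, exist_ok=True)
    final_path = out_dir / f"chunk_{index:04d}.bin"  # hypothetical naming scheme
    # Create the temp file in the same directory so the rename stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=out_dir, suffix=".part")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())
        # The final file only appears once the chunk is completely written.
        os.replace(tmp_name, final_path)
    except BaseException:
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
    return final_path


def missing_chunks(out_dir: Path, total: int) -> List[int]:
    """Return the indices of chunks that still need to be downloaded."""
    return [i for i in range(total) if not (out_dir / f"chunk_{i:04d}.bin").exists()]
```

After a restart, calling `missing_chunks(out_dir, total)` gives the list of chunks to fetch again, so the already completed ones do not need to be re-downloaded.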

Next Week Goals:

- Complete dataset preprocessing.

- Begin writing the model code, starting with a simple model (SVM), as I already have audio-visual features.

- Implement a CI/CD pipeline to ensure the code is reproducible.

- Connect the BeagleBone AI-64 to the monitor and run demo models on it.

@Aryan_Nanda

- Connect the set-top box's HDMI output to the input of an HDMI-to-USB converter, and plug the converter into one of the USB ports of the BeagleBone AI-64 or a laptop to record the training dataset.

- Upload the dataset to your Google Colab Pro account storage and train your model.

- When you run inference on the BeagleBone AI-64, connect the HDMI output of the set-top box or media player to the input of the HDMI-to-USB converter, and connect the converter to your BeagleBone AI-64. Use an active miniDP-to-HDMI cable from your BeagleBone AI-64 to a monitor to see the output generated by your model.

For the first step, you can also use an HDMI-to-CSI converter with the BeagleBone AI-64 to record the dataset. For the third step, use the same HDMI-to-CSI setup to get the video feed, then connect the active miniDP-to-HDMI cable to your HDMI-to-USB converter and plug that into your laptop, so that you don’t need an additional monitor.


The issue I’ve found with that is capturing the audio. I’ve got one of those converters and setting up the audio capture looks a bit painful. Audio capture will be easier using the USB drivers. With a superspeed HDMI to USB, I think there will be good bandwidth.

In that case, I can ship another HDMI to USB to @Aryan_Nanda so that he can use his laptop to monitor the output.

Minutes of Meeting (24-06-2024)

Attendees:

Key Points:

- Provided updates on the model: completed dataset preprocessing and merged audio-visual features. Currently working on generating uniform features and converting them to NumPy arrays (np.array) for model input.

- Purchased a Google Colab Pro account and received reimbursement.

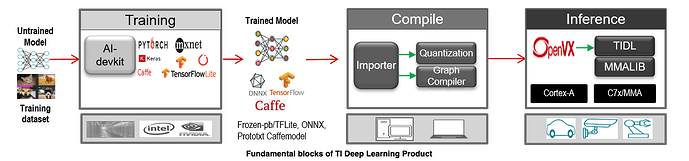

- @jkridner explained the quantization process and shared this reference link.

- Discussed the next steps for BeagleBone AI-64, starting with this guide.

- He also shared this webinar link on BeagleBone AI-64.

- Spent 30 minutes attempting to resolve the CI pipeline failure issue mentioned on Discord.

(All links pasted here for future reference)
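For anyone following along, here is a rough sketch of what "generating uniform features" and converting them to a NumPy array could look like. It assumes that "uniform" means every sample is padded or truncated to the same number of frames, and that each sample is a 2-D (frames, features) sequence. Both are assumptions on my part, and the function name `to_uniform_array` is hypothetical, not taken from the project code.

```python
import numpy as np


def to_uniform_array(sequences, target_len, pad_value=0.0):
    """Pad or truncate each (frames, features) sequence to target_len frames.

    Assumes every sequence has the same number of feature columns.
    """
    n_features = np.asarray(sequences[0]).shape[1]
    out = np.full((len(sequences), target_len, n_features), pad_value, dtype=np.float32)
    for i, seq in enumerate(sequences):
        seq = np.asarray(seq, dtype=np.float32)[:target_len]  # truncate long sequences
        out[i, : seq.shape[0]] = seq  # short sequences keep the trailing padding
    return out
```

The result has shape (samples, target_len, features), which is the layout sequence models such as LSTMs usually expect as input.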

Week 4 Updates:

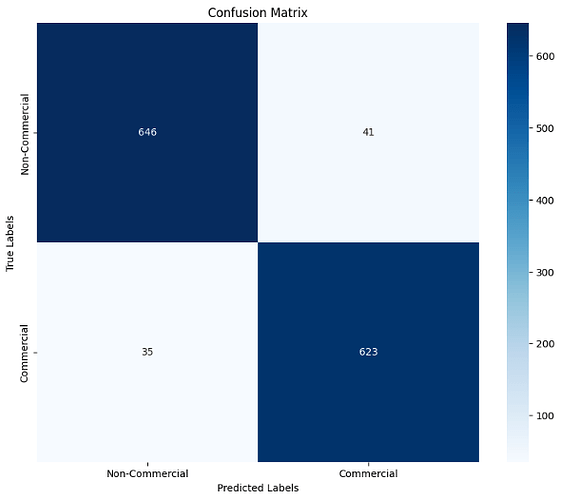

- Implemented unidirectional LSTMs, bidirectional LSTMs, CNNs, and a combined LSTM + CNN model.

- Trained different models and evaluated them.

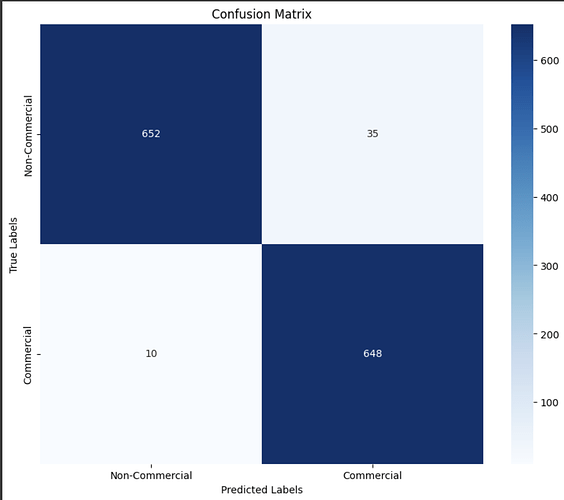

- The bidirectional LSTM model gave the best accuracy on the test data (97.1%).

- Confusion Matrix of this model:

- Bought 500 more compute units in Google Colab.

- Created a Dockerfile and built an image that provides conda functionality along with CUDA drivers, and pushed it here. This image will be used as the base image in the CI pipeline.

- Also resolved last week’s CI pipeline failure by adding a few steps before running the main script.
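As a reference for how the accuracy figure relates to the confusion matrix, here is a small plain-Python sketch. The label encoding (0 = non-commercial, 1 = commercial) is an assumption, the function names are illustrative, and any data passed in is up to the reader; none of this reproduces the actual evaluation code or results.

```python
def confusion_matrix(y_true, y_pred, n_classes=2):
    """Count samples per (true, predicted) pair; rows are true classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples that lie on the diagonal of the confusion matrix."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0
```

Accuracy is the diagonal divided by the total, so the off-diagonal cells show which kind of mistake (missed commercial vs. false alarm) makes up the remaining error.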

Blockers

TensorFlow is unable to use the GPU in the CI pipeline because cuDNN is missing from the image. I will add cuDNN to the Docker image and push it with a new tag.
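A quick check like the one below could run as an early CI step to confirm whether TensorFlow sees a GPU before the main script starts. It is only a sketch: the helper name `visible_gpus` is hypothetical, and returning an empty list when TensorFlow is not installed is a choice made for illustration.

```python
def visible_gpus():
    """Return the names of GPUs TensorFlow can see, or [] if none (or TF is absent)."""
    try:
        import tensorflow as tf
    except ImportError:
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    print("GPUs visible to TensorFlow:", gpus or "none")
```

If the list is empty inside the container, TensorFlow will fall back to the CPU, and its startup log usually names the library it failed to load.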

Next Week’s Goals

- Generate model artifacts by referring to forum threads and Edge AI TIDL Tools.

- Import the trained model to BeagleBone AI-64 by referring to the Import Custom Models guide.

- Run inference on BeagleBone AI-64.

- Get into the details of Edge AI TIDL Tools (TI Model Zoo, quantization process, etc.).

- Train the transformers model.

Important Commits

Week 2-3 Blog out - Dataset Preprocessing

(This was missed in the weekly updates)