I haven’t gotten to the point in my current project where I’m working on GPIO, so I don’t yet have a self-contained sample or even definitive references. But I figure I can provide some general pointers if it’s helpful.

On hosted Linux, the BeagleBoard crew and/or TI has configured the appropriate driver to expose available ports via userspace APIs. Internally, those drivers read and write memory addresses that the hardware maps to particular I/O configuration registers. E.g., logically, there’s a memory address somewhere which you can write a “1” to and it’ll set your chosen pin to a logic-level high, or a “0” and it’ll set it low. When you ask the kernel to set the pin high, it’s just writing to the appropriate memory address.
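To make that concrete, here is a minimal sketch in C of what “writing to the appropriate memory address” tends to look like. The function name `pin_write` and the idea that one bit in one register holds the pin’s level are my assumptions for illustration; the real register address and bit position have to come from the chip’s reference manual.

```c
#include <stdint.h>

/* Set or clear one pin's output bit in a memory-mapped register.
 * ASSUMPTION: `reg` points at an output-data register and `bit` is the
 * pin's position in it. Both are placeholders, not real TI values. */
static inline void pin_write(volatile uint32_t *reg, unsigned bit, int high)
{
    if (high)
        *reg |= (UINT32_C(1) << bit);   /* drive the pin high */
    else
        *reg &= ~(UINT32_C(1) << bit);  /* drive the pin low */
}
```

The `volatile` qualifier matters here: it tells the compiler that each access has a side effect, so it can’t cache or drop the write. On real hardware you’d pass something like `(volatile uint32_t *)SOME_ADDRESS_FROM_THE_TRM` as `reg`.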

In practice, there’s some configuration the driver needs to do to tell the pin what mode to operate in – in this case, pure digital output. Other modes include digital input or a more specialized function like SPI or UART. Most pins have many alternate functions they can be configured for.
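Mode selection usually comes down to a read-modify-write of a small bitfield in a per-pin configuration register. The sketch below shows the general shape; the field positions, the mode numbers, and the names `pad_set_mode`, `PAD_MODE_MASK`, and `PAD_INPUT_EN` are all hypothetical and not taken from any TI document.

```c
#include <stdint.h>

/* ASSUMPTION: the mode select field lives in bits 3:0 and a separate
 * bit enables the input buffer. Check the real layout in the manual. */
#define PAD_MODE_MASK  UINT32_C(0xF)
#define PAD_INPUT_EN   (UINT32_C(1) << 8)

/* Hypothetical mode numbers; real ones differ per pin and per chip. */
enum pad_mode { PAD_MODE_UART = 0, PAD_MODE_SPI = 1, PAD_MODE_GPIO = 7 };

/* Change only the mode and input-enable fields, leaving all other
 * bits of the configuration register untouched. */
static inline void pad_set_mode(volatile uint32_t *pad_reg,
                                enum pad_mode mode, int input_enable)
{
    uint32_t v = *pad_reg;
    v &= ~(PAD_MODE_MASK | PAD_INPUT_EN);
    v |= (uint32_t)mode & PAD_MODE_MASK;
    if (input_enable)
        v |= PAD_INPUT_EN;
    *pad_reg = v;
}
```

Masking before OR-ing in the new value is the important part, since these registers typically pack unrelated settings (pull-ups, drive strength, and so on) into the same word.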

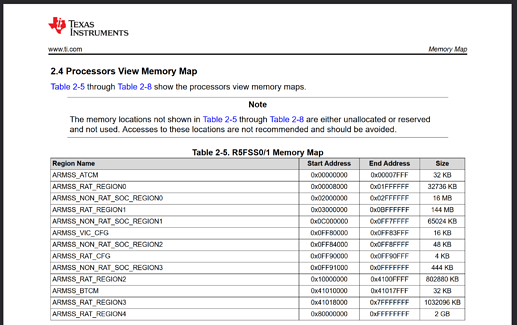

So, without the Linux kernel driver in place, you’ll have to do this “register-prodding” yourself. This means checking the chip’s documentation to understand which registers need to have their values changed and what those registers’ memory addresses are.

If you leave the relevant pins in the Linux device tree, the kernel might do you a favor and pre-configure the pins so that all you have to do is set their desired output value. I’d personally avoid that, since it leaves both the kernel and your code touching the same pin and sets up a race condition, but it’s a possibility.

At a quick glance, section 12.1.2.4 of the Technical Reference Manual for the TDA4VM outlines the process of configuring a pin’s mode and setting its output state. There’s a different section which gives the actual memory addresses of the relevant registers. The process doesn’t look too bad so long as you identify the right register for your pin.
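I haven’t worked through that section in detail, so treat the following as a rough sketch of the usual pattern rather than the TRM’s actual procedure: route the pad to the GPIO function, set the output level, then switch the pin’s direction to output. The `struct gpio_bank` layout, the `PAD_MUX_*` values, and the function name `gpio_setup_output` are assumptions I made up for illustration.

```c
#include <stdint.h>

/* HYPOTHETICAL register block for one GPIO bank. Real offsets, names,
 * and bit meanings must be taken from the TRM. */
struct gpio_bank {
    volatile uint32_t dir;       /* assumed: 1 = input, 0 = output */
    volatile uint32_t out_data;  /* assumed: one output-level bit per pin */
};

#define PAD_MUX_MASK  UINT32_C(0xF)  /* assumed mode field, bits 3:0 */
#define PAD_MUX_GPIO  UINT32_C(0x7)  /* assumed "GPIO" mode number */

static void gpio_setup_output(volatile uint32_t *pad_reg,
                              struct gpio_bank *bank,
                              unsigned pin, int initial_high)
{
    uint32_t mask = UINT32_C(1) << pin;

    /* 1. Route the pad to the GPIO function. */
    *pad_reg = (*pad_reg & ~PAD_MUX_MASK) | PAD_MUX_GPIO;

    /* 2. Latch the initial level before the pin starts driving. */
    if (initial_high)
        bank->out_data |= mask;
    else
        bank->out_data &= ~mask;

    /* 3. Switch the pin to output. */
    bank->dir &= ~mask;
}
```

Setting the level before flipping the direction is a common habit to avoid a brief glitch on the pin; the order the TRM prescribes may differ, so follow the manual where they disagree.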

If you are looking for a simple high/low control, that’s all you need to do. If you want other types of peripherals like SPI or UART, there are other modes you’ll have to configure the pin for and more setup that has to be done. The TRM has information on that as well.

Hopefully in a week or two I’ll have at least some basic code samples that could help out here. I haven’t written for bare metal on TI processors either (only STM32s and AVRs), so I’m right there with you on deciphering the datasheets.